Whether you're scraping product prices, monitoring SEO results, or testing content availability across regions, integrating proxies into your requests workflow helps ensure reliability, scale, and access. In this guide, we’ll walk through exactly how to use proxies with the requests library – covering everything from basic setup to advanced use cases like rotating proxies and handling failures.

Why Use Proxies with Python’s requests Library?

Python’s requests library is a go-to tool for making HTTP requests in a simple, human-friendly way. Whether you’re building a quick API integration or scraping data from the web, requests makes it easy to send and receive data without a lot of boilerplate code.

But if you’re sending a high number of requests – especially to public websites or APIs – your IP address can quickly become a bottleneck. Many sites use rate-limiting or IP-based blocking to protect their infrastructure. That’s where proxies come in.

A proxy acts as an intermediary between your machine and the internet. Instead of your request going directly from your IP to a target server, it’s routed through another server (the proxy), which then forwards your request. This can bring several important benefits:

- Avoiding IP blocks: Rotate through multiple IPs to reduce the risk of being blacklisted.

- Bypassing geo-restrictions: Use IPs from specific countries or regions to access location-specific content.

- Anonymity and privacy: Mask your real IP address for added security.

- Testing and monitoring: Simulate requests from different geographies or networks to check how your services behave.

Types of Proxies Supported

Before diving into implementation, it’s important to understand the types of proxies you can use with Python’s requests library. Each type has its own use cases, benefits, and limitations. Let’s break down the most common ones:

HTTP Proxies

These proxies are designed to handle standard HTTP traffic. They’re commonly used for accessing websites over the unsecured http:// protocol.

Use case: Basic web scraping from non-secure endpoints or internal tools. Example format:

http://username:password@proxyserver:port

HTTPS Proxies

These proxies tunnel secure HTTPS traffic (https://), typically via the HTTP CONNECT method. They use the same URL syntax as HTTP proxies; an https:// scheme in the proxy URL means the connection to the proxy itself is also encrypted with TLS.

Use case: Accessing modern websites, APIs, or anything that requires a secure connection. Example format:

https://username:password@proxyserver:port

Note: Even when using HTTPS targets, you may still use http:// in your proxy string; the requests library will handle the secure tunneling under the hood.

SOCKS Proxies (SOCKS4/SOCKS5)

SOCKS proxies work at a lower level than HTTP/HTTPS, forwarding any type of traffic – not just web requests. SOCKS5 is the most flexible version, supporting both TCP and UDP traffic, along with authentication.

Use case: More advanced scenarios like anonymizing traffic, bypassing firewalls, or routing non-HTTP data. To use SOCKS proxies with requests, you’ll need an extra dependency:

pip install requests[socks]

Then use it like this:

proxies = {

'http': 'socks5://user:pass@host:port',

'https': 'socks5://user:pass@host:port'

}

Proxy Types Overview

These aren’t different from a protocol perspective (they can be HTTP/S or SOCKS), but they differ in origin and perceived legitimacy:

- Residential proxies use real consumer IP addresses provided by ISPs. They are less likely to be flagged or blocked.

- Datacenter proxies are fast and cheap but easier to detect.

- ISP proxies blend residential legitimacy with datacenter stability.

- Mobile proxies route traffic through real 3G/4G networks, ideal for mobile-specific scraping or testing.

Basic Example: Using a Single Proxy in Python

Using a proxy with Python’s requests library is straightforward. The library lets you pass a dictionary of proxies through the proxies parameter of your request. In the basic example below, the request is routed through the proxy server you provide; if everything is set up correctly, the response shows the proxy’s IP address instead of your own:

import requests

proxies = {

'http': 'http://your_proxy_ip:port',

'https': 'http://your_proxy_ip:port',

}

response = requests.get('https://httpbin.org/ip', proxies=proxies)

print(response.json())

Basic Proxy Authentication (Username & Password)

Proxy services typically require authentication, done by embedding the credentials directly into the proxy URL:

proxies = {

'http': 'http://username:password@your_proxy_ip:port',

'https': 'http://username:password@your_proxy_ip:port',

}
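If the username or password contains reserved characters such as @, : or /, embedding them as-is can produce a proxy URL that is parsed incorrectly. Below is a minimal sketch that percent-encodes the credentials with the standard library first. The helper name build_proxy_url and the sample password are illustrative assumptions, not part of requests:

```python
from urllib.parse import quote


def build_proxy_url(user, password, host, port, scheme="http"):
    """Return a proxy URL with percent-encoded credentials.

    Hypothetical helper for illustration; not part of the requests API.
    """
    # safe="" also encodes "/" so every reserved character is escaped.
    user_enc = quote(user, safe="")
    pass_enc = quote(password, safe="")
    return f"{scheme}://{user_enc}:{pass_enc}@{host}:{port}"


proxy_url = build_proxy_url("user123", "p@ss:word/1", "123.45.67.89", 8080)
proxies = {"http": proxy_url, "https": proxy_url}
print(proxy_url)
```

The resulting dictionary can be passed to the proxies parameter exactly like the hand-written one above.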

Here’s the full example:

import requests

proxies = {

'http': 'http://user123:pass456@123.45.67.89:8080',

'https': 'http://user123:pass456@123.45.67.89:8080',

}

response = requests.get('https://httpbin.org/ip', proxies=proxies)

print(response.json())

Setting a Timeout

It's a good practice to set a timeout on your requests, especially when working with proxies (which may slow down or fail to respond) – this avoids your script hanging forever in case the proxy is down or the site takes too long to respond:

response = requests.get('https://httpbin.org/ip', proxies=proxies, timeout=10)

Advanced Proxy Authentication

If you’d prefer not to hardcode credentials (for security reasons), you can store them in environment variables or use a secrets manager.

Set environment variables on Windows (Command Prompt):

set PROXY_USER=user123

set PROXY_PASS=pass456

In PowerShell, use the $env: syntax instead:

$env:PROXY_USER = "user123"

$env:PROXY_PASS = "pass456"

Set environment variables on Linux/macOS (Bash or Zsh):

export PROXY_USER=user123

export PROXY_PASS=pass456

To make these persistent across sessions, you can add them to your shell profile file (like .bashrc, .zshrc, or .bash_profile).

To access them in Python, use the os module to read them into your script:

import os

import requests

proxy_user = os.getenv("PROXY_USER")

proxy_pass = os.getenv("PROXY_PASS")

proxy_ip = "123.45.67.89"

proxy_port = "8080"

proxy_url = f"http://{proxy_user}:{proxy_pass}@{proxy_ip}:{proxy_port}"

proxies = {

'http': proxy_url,

'https': proxy_url,

}

response = requests.get("https://httpbin.org/ip", proxies=proxies, timeout=10)

print(response.json())

If the environment variables aren't found, os.getenv() will return None, so you can optionally add fallbacks or error checks:

if not proxy_user or not proxy_pass:
    raise EnvironmentError("Missing PROXY_USER or PROXY_PASS environment variables.")

Optionally, use a .env file (with python-dotenv) if you prefer not to set system-wide environment variables. First, install the package:

pip install python-dotenv

Then create a .env file containing:

PROXY_USER=user123

PROXY_PASS=pass456

Finally, load it in Python:

from dotenv import load_dotenv
import os

load_dotenv()  # Loads variables from the .env file into the environment

# Then use os.getenv() as shown above

Rotating Proxies (Manually or with a List)

When you’re sending a high volume of requests – especially to websites that monitor traffic – using a single proxy won’t cut it. To avoid IP bans and rate limiting, you’ll want to rotate through a list of proxies. This technique spreads requests across multiple IP addresses, reducing the chances of detection.

There are two common ways to implement basic rotation: random selection and round-robin cycling.

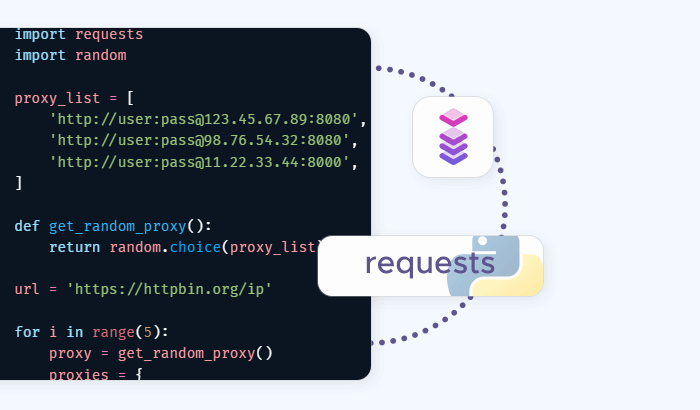

Option 1: Random Proxy Selection

Randomly selecting a proxy for each request adds a layer of unpredictability. This is simple to implement and works well for small to medium-scale scraping tasks.

import requests

import random

proxy_list = [

'http://user:pass@123.45.67.89:8080',

'http://user:pass@98.76.54.32:8080',

'http://user:pass@11.22.33.44:8000',

]

def get_random_proxy():
    return random.choice(proxy_list)

url = 'https://httpbin.org/ip'

for i in range(5):
    proxy = get_random_proxy()
    proxies = {
        'http': proxy,
        'https': proxy,
    }
    try:
        response = requests.get(url, proxies=proxies, timeout=10)
        print(f"[{i+1}] IP: {response.json()['origin']}")
    except requests.exceptions.RequestException as e:
        print(f"[{i+1}] Request failed with proxy {proxy}: {e}")
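The failure message above prints the full proxy URL, including the username and password. If the output goes to shared logs, it may be worth masking the credentials first. Below is a minimal sketch using the standard library; the helper name redact_proxy is an assumption for illustration:

```python
from urllib.parse import urlsplit


def redact_proxy(proxy_url):
    """Return the proxy URL with any credentials replaced by '***'.

    Hypothetical helper for illustration; not part of the requests API.
    """
    parts = urlsplit(proxy_url)
    if parts.username is None:
        return proxy_url  # No credentials, nothing to hide
    host = parts.hostname or ""
    port = f":{parts.port}" if parts.port else ""
    return f"{parts.scheme}://***@{host}{port}"


print(redact_proxy("http://user:pass@123.45.67.89:8080"))
```

In the loop, the error message could then use redact_proxy(proxy) in place of proxy.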

Option 2: Round-Robin Proxy Rotation

Instead of choosing proxies at random, you can cycle through them one by one. This is useful when you want to balance traffic evenly across all proxies.

import requests

from itertools import cycle

proxy_list = [

'http://user:pass@123.45.67.89:8080',

'http://user:pass@98.76.54.32:8080',

'http://user:pass@11.22.33.44:8000',

]

proxy_pool = cycle(proxy_list) # Creates an infinite iterator

url = 'https://httpbin.org/ip'

for i in range(5):
    proxy = next(proxy_pool)
    proxies = {
        'http': proxy,
        'https': proxy,
    }
    try:
        response = requests.get(url, proxies=proxies, timeout=10)
        print(f"[{i+1}] IP: {response.json()['origin']}")
    except requests.exceptions.RequestException as e:
        print(f"[{i+1}] Request failed with proxy {proxy}: {e}")
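Plain round-robin keeps handing out a proxy even after it has stopped working. One possible refinement, sketched here under the assumption that a proxy should be dropped after a fixed number of failures, is a small pool class. ProxyPool, mark_failed, and max_failures are hypothetical names, not part of requests:

```python
from itertools import cycle


class ProxyPool:
    """Round-robin pool that drops proxies after repeated failures.

    Hypothetical helper for illustration; not part of the requests API.
    """

    def __init__(self, proxy_urls, max_failures=3):
        self._failures = {url: 0 for url in proxy_urls}
        self._max_failures = max_failures
        self._rebuild()

    def _rebuild(self):
        # Recreate the cycle from the proxies that are still alive.
        self._cycle = cycle(list(self._failures))

    def get(self):
        """Return the next proxy as a dict for the proxies= parameter."""
        if not self._failures:
            raise RuntimeError("No working proxies left in the pool.")
        url = next(self._cycle)
        return {"http": url, "https": url}

    def mark_failed(self, url):
        """Record a failure and remove the proxy once it hits the limit."""
        if url not in self._failures:
            return
        self._failures[url] += 1
        if self._failures[url] >= self._max_failures:
            del self._failures[url]
            self._rebuild()

    def __len__(self):
        return len(self._failures)
```

In the loop, proxies = pool.get() would replace next(proxy_pool), and the except branch would call pool.mark_failed(proxies['https']). Note that this sketch restarts the rotation from the first remaining proxy whenever one is removed.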

Delays & Retry Strategy

Rotating proxies helps, but it’s not a silver bullet. Some websites still use sophisticated techniques to detect and block automated traffic. To make your scraper more human-like and resilient:

- Add random delays between requests using time.sleep() and random.uniform().

- Retry failed requests with backoff logic or by switching to another proxy.

- Limit concurrency to avoid overwhelming the site or triggering bot defenses.

Here’s a simple delay example:

import time

import random

# After each request

time.sleep(random.uniform(2, 5)) # Sleep between 2 and 5 seconds
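The retry advice above can be combined with proxy switching. The following is a rough sketch, not a definitive implementation: it assumes exponential backoff with random jitter and moves to the next proxy in the list on every attempt. The names fetch_with_retries and backoff_delay, and the get and sleep parameters (there only to make the function easy to test), are hypothetical:

```python
import random
import time

import requests


def backoff_delay(attempt, base=1.0, cap=30.0):
    """Exponential backoff with jitter: up to base * 2**attempt seconds."""
    return random.uniform(0, min(cap, base * (2 ** attempt)))


def fetch_with_retries(url, proxy_list, max_attempts=4, get=requests.get,
                       sleep=time.sleep):
    """Try the request through different proxies, backing off between tries.

    Hypothetical helper; `get` and `sleep` are injectable to ease testing.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error = None
    for attempt in range(max_attempts):
        proxy = proxy_list[attempt % len(proxy_list)]  # Switch proxy each try
        proxies = {"http": proxy, "https": proxy}
        try:
            # timeout is (connect timeout, read timeout) in seconds
            response = get(url, proxies=proxies, timeout=(5, 15))
            response.raise_for_status()  # Treat 4xx/5xx responses as failures
            return response
        except requests.exceptions.RequestException as exc:
            last_error = exc
            if attempt < max_attempts - 1:
                sleep(backoff_delay(attempt))
    raise last_error
```

A call such as fetch_with_retries('https://httpbin.org/ip', proxy_list) returns the first successful response or re-raises the last error. Retrying every 4xx status is a simplification; in practice you may want to retry only on statuses such as 429 or 5xx.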

Using SOCKS Proxies (Optional Advanced)

While HTTP and HTTPS proxies are the most common for web scraping, SOCKS (Socket Secure) proxies offer more flexibility. They can handle a broader range of traffic types – not just HTTP or HTTPS requests, but also FTP, SMTP, and more. SOCKS5, the latest version, adds support for authentication and offers better security than earlier versions.

Python’s requests library doesn’t natively support SOCKS proxies, but you can easily enable SOCKS support by installing an additional package.

1. Installation: requests[socks]

To use SOCKS proxies, you need to install the requests[socks] extra, which installs the necessary dependencies like PySocks. This will add SOCKS support to your requests library, allowing you to use SOCKS5 proxies seamlessly.

pip install requests[socks]
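If the extra is not installed, a request through a socks5:// URL fails with an error about missing SOCKS dependencies. As a quick sanity check, the sketch below tests whether the PySocks module (which is imported under the name socks) can be found; the function name socks_support_available is an assumption for illustration:

```python
import importlib.util


def socks_support_available():
    """Return True if the PySocks module (imported as `socks`) is installed."""
    return importlib.util.find_spec("socks") is not None


if not socks_support_available():
    print("SOCKS support is missing; run: pip install requests[socks]")
```

In shells that treat square brackets as glob patterns, such as zsh, quote the argument: pip install "requests[socks]".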

2. Example: Using a SOCKS5 Proxy

Once you’ve installed the required package, using a SOCKS5 proxy is just as simple as using an HTTP/HTTPS proxy. The only difference is the proxy URL scheme (socks5://). If your proxy requires authentication, include the username and password in the URL as well:

import requests

# SOCKS5 proxy with authentication

proxies = {

'http': 'socks5://user:pass@123.45.67.89:1080',

'https': 'socks5://user:pass@123.45.67.89:1080',

}

url = 'https://httpbin.org/ip'

try:
    response = requests.get(url, proxies=proxies, timeout=10)
    print(f"IP: {response.json()['origin']}")
except requests.exceptions.RequestException as e:
    print(f"Request failed: {e}")
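One detail worth knowing: with the socks5:// scheme, hostnames are resolved on your machine, while socks5h:// asks the proxy to resolve them, which keeps DNS lookups off your own network. Below is a small sketch of a builder that defaults to remote resolution; socks5_proxies and its parameters are hypothetical names, not part of requests:

```python
def socks5_proxies(host, port, user=None, password=None, remote_dns=True):
    """Build a proxies dict for a SOCKS5 proxy.

    Hypothetical helper for illustration; not part of the requests API.
    """
    # "socks5h" asks the proxy to resolve hostnames; "socks5" resolves locally.
    scheme = "socks5h" if remote_dns else "socks5"
    auth = f"{user}:{password}@" if user and password else ""
    url = f"{scheme}://{auth}{host}:{port}"
    return {"http": url, "https": url}


proxies = socks5_proxies("123.45.67.89", 1080, "user", "pass")
print(proxies["https"])
```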

3. SOCKS Proxy Variants

You can also use other SOCKS versions, like SOCKS4, by changing the proxy URL scheme. However, SOCKS5 is typically recommended due to its added security and flexibility; SOCKS4, for instance, has no username/password authentication, so the example below omits credentials.

proxies = {
    'http': 'socks4://123.45.67.89:1080',
    'https': 'socks4://123.45.67.89:1080',
}

4. Advanced Configuration: Handling SOCKS Proxies with Failures

Just like with HTTP proxies, SOCKS proxies can occasionally fail (e.g., if the proxy is down, misconfigured, or overloaded). You can handle this with error handling and retry logic. For example, wrap the request in a try/except block to catch errors:

try:
    response = requests.get(url, proxies=proxies, timeout=10)
    print(f"IP: {response.json()['origin']}")
except requests.exceptions.RequestException as e:
    print(f"Request failed with SOCKS proxy: {e}")
    # Optionally, try a different proxy here

Common Mistakes to Avoid

Working with proxies in Python can save your scraping project – or break it entirely if you're not careful. Here are some of the most common mistakes developers make when using proxies with the requests library, and how to avoid them.

Forgetting 'https' in the proxies Dictionary

One of the most frequent errors is setting only the http key in the proxies dictionary. requests picks the proxy by the scheme of the target URL, so with only the http key set, requests to https:// URLs bypass the proxy and go out directly from your own IP. Even if you're scraping only HTTPS websites, define both keys explicitly for consistent behavior.

Incorrect:

proxies = {

'http': 'http://your_proxy_ip:port'

}

Correct:

proxies = {

'http': 'http://your_proxy_ip:port',

'https': 'http://your_proxy_ip:port',

}
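One way to make this mistake harder to repeat is to build the dictionary from a single URL and check it before use. The following is only a sketch; proxies_for and missing_schemes are hypothetical helper names, not part of requests:

```python
def proxies_for(proxy_url):
    """Map both URL schemes to the same proxy.

    Hypothetical helper for illustration; not part of the requests API.
    """
    return {"http": proxy_url, "https": proxy_url}


def missing_schemes(proxies):
    """Return the schemes ('http', 'https') that have no proxy configured."""
    return [scheme for scheme in ("http", "https") if not proxies.get(scheme)]


print(missing_schemes({"http": "http://your_proxy_ip:port"}))     # ['https']
print(missing_schemes(proxies_for("http://your_proxy_ip:port")))  # []
```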

Using Free Proxies

Free proxies might seem tempting, but they come with major downsides:

- Unreliable: They often go offline without notice.

- Slow: Free proxies are usually overloaded with traffic.

- Insecure: You’re routing your data through an unknown third party, which can pose serious privacy and security risks.

- Blacklisted: Many websites already block known free proxy IP ranges.

Stick with trusted proxy providers (like Infatica) that offer stable performance, support, and dedicated IP pools.

Ignoring Latency and Timeout Configuration

Not all proxies are equal in terms of speed. If you don’t configure a timeout, your script could hang indefinitely when a proxy is slow or unresponsive. Always set a timeout:

response = requests.get('https://example.com', proxies=proxies, timeout=10)

Additionally, consider monitoring response times and skipping proxies that regularly exceed a certain latency threshold.
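A rough sketch of such monitoring follows, assuming you record each response time yourself (for example from response.elapsed.total_seconds()) and compare a short moving average against a threshold. LatencyTracker and the 3-second threshold are illustrative assumptions:

```python
from collections import defaultdict, deque
from statistics import mean


class LatencyTracker:
    """Keep recent response times per proxy and flag the slow ones.

    Hypothetical helper for illustration; not part of the requests API.
    """

    def __init__(self, threshold_seconds=3.0, window=5):
        self.threshold = threshold_seconds
        # Store only the last `window` measurements for each proxy.
        self._samples = defaultdict(lambda: deque(maxlen=window))

    def record(self, proxy, seconds):
        self._samples[proxy].append(seconds)

    def is_slow(self, proxy):
        samples = self._samples.get(proxy)
        # A proxy with no measurements yet is not considered slow.
        return bool(samples) and mean(samples) > self.threshold

    def usable(self, proxy_list):
        return [p for p in proxy_list if not self.is_slow(p)]


tracker = LatencyTracker(threshold_seconds=3.0)
tracker.record("http://p1:8080", 0.8)
tracker.record("http://p2:8080", 4.5)
print(tracker.usable(["http://p1:8080", "http://p2:8080", "http://p3:8080"]))
```

Here the second proxy is filtered out because its average exceeds the threshold, while the third stays in the list because nothing has been measured for it yet.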

Frequently Asked Questions

Yes, but not interchangeably. HTTP/HTTPS proxies are supported natively, while SOCKS proxies require installing requests[socks] via pip.
